Mistral launches API for building plug-and-play AI agents


The well-funded and innovative French AI startup Mistral AI is introducing a new service for enterprise customers and independent software developers alike.

Mistral’s Agents application programming interface (API) lets third-party developers add autonomous generative AI capabilities, such as securely pulling information from enterprise documents, to their existing enterprise and independent applications. Each agent uses Mistral’s newest proprietary model, Medium 3, as its “brains.”

It is designed as a “plug and play” platform, with extensive customization options, for getting AI agents up and running in enterprise and developer workflows.

Designed to complement Mistral’s existing Chat Completion API, this latest release focuses on agentic orchestration, built-in connectors, persistent memory, and the flexibility to coordinate multiple AI agents to tackle complex tasks.

Surpassing the limits of typical LLMs…

While traditional language models excel at generating text, they often fall short in executing actions or maintaining conversational context over time.

Mistral’s Agents API addresses these limitations by providing developers with the tools to create AI agents capable of performing real-world tasks, managing interactions across conversations, and dynamically orchestrating multiple agents when needed.

The Agents API comes equipped with several built-in connectors, including:

- Code Execution: Securely runs Python code, enabling applications in data visualization, scientific computing, and other technical tasks.

- Image Generation: Uses Black Forest Labs' FLUX1.1 [pro] Ultra to create custom visuals for marketing, education, or artistic uses.

- Document Library: Accesses documents stored in Mistral Cloud, enhancing retrieval-augmented generation (RAG) features.

- Web Search: Allows agents to retrieve up-to-date information from online sources, news outlets, and other reputable platforms.
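To make the connector list concrete, here is a minimal sketch of how an application might describe an agent and enable a subset of these connectors. It only builds a configuration dictionary locally and sends nothing. The tool type strings (`code_interpreter`, `image_generation`, `web_search`, `document_library`), the `library_ids` field, the model ID `mistral-medium-latest`, and the helper `build_agent_config` are assumptions for illustration; check Mistral's Agents API reference for the exact schema.

```python
import json

# Assumed mapping from the connector names in this article to tool "type"
# strings; verify against Mistral's official Agents API reference.
CONNECTOR_TYPES = {
    "code_execution": "code_interpreter",
    "image_generation": "image_generation",
    "web_search": "web_search",
    "document_library": "document_library",
}


def build_agent_config(name, instructions, connectors, library_ids=None,
                       model="mistral-medium-latest"):
    """Hypothetical helper: return a dict describing an agent and its connectors."""
    tools = []
    for connector in connectors:
        if connector not in CONNECTOR_TYPES:
            raise ValueError(f"unknown connector: {connector}")
        tool = {"type": CONNECTOR_TYPES[connector]}
        # The document library connector needs to know which libraries to search.
        if connector == "document_library":
            tool["library_ids"] = list(library_ids or [])
        tools.append(tool)
    return {
        "model": model,
        "name": name,
        "instructions": instructions,
        "tools": tools,
    }


config = build_agent_config(
    name="research-assistant",
    instructions="Answer questions using current sources and cite them.",
    connectors=["web_search", "code_execution"],
)
print(json.dumps(config, indent=2))
```

Keeping the connector selection in a plain data structure makes it easy to review which capabilities (and which billable calls) each agent has before it is created.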

The API also supports MCP tools, which connect agents to external resources like APIs, databases, user data, and documents—extending the agents’ abilities to handle dynamic, real-world content.
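Under the Model Context Protocol, clients invoke a server's tools with JSON-RPC 2.0 requests using the `tools/call` method, passing the tool's name and its arguments. The sketch below only builds such a message to show its shape; the transport (stdio or HTTP) is omitted, how the Agents API relays these calls internally is not described in this article, and the tool name `query_customer_db` and its arguments are hypothetical.

```python
import itertools
import json

# JSON-RPC requires each request to carry an id; a simple counter suffices here.
_request_ids = itertools.count(1)


def mcp_tool_call(tool_name, arguments):
    """Build a JSON-RPC 2.0 "tools/call" request for an MCP server."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool exposed by an MCP server that fronts a customer database.
request = mcp_tool_call("query_customer_db", {"customer_id": "C-1024"})
print(json.dumps(request))
```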

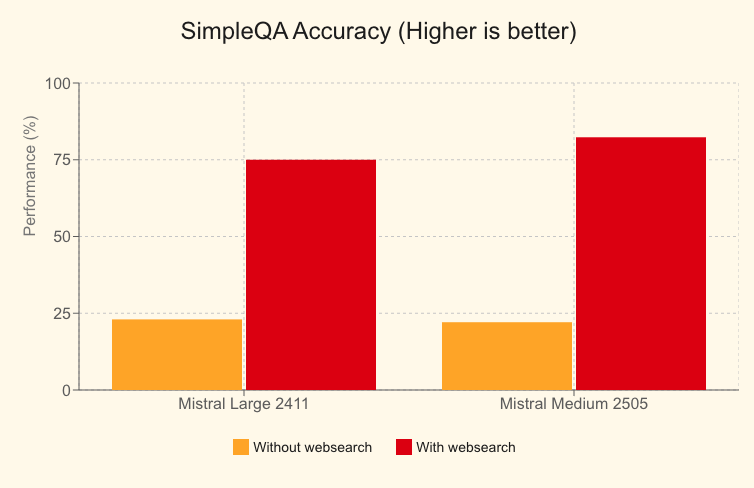

Enhanced accuracy using web search

One significant feature of the Agents API is the integration of web search as a connector, which notably improves performance on tasks requiring accurate, up-to-date information.

In benchmark testing on the SimpleQA dataset, Mistral Large’s accuracy rose from 23% to 75% when web search was enabled. Mistral Medium showed an even larger improvement, rising from 22.08% to 82.32%.
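The size of these jumps is easier to compare as absolute gains (percentage points) and as ratios. This short calculation uses only the figures reported above.

```python
# SimpleQA accuracy (%) reported above: (without web search, with web search).
results = {
    "Mistral Large": (23.0, 75.0),
    "Mistral Medium": (22.08, 82.32),
}

for model, (without_search, with_search) in results.items():
    gain_points = with_search - without_search  # absolute gain in percentage points
    ratio = with_search / without_search         # how many times higher the score is
    print(f"{model}: +{gain_points:.2f} points ({ratio:.2f}x)")
```

Running it prints `Mistral Large: +52.00 points (3.26x)` and `Mistral Medium: +60.24 points (3.73x)`: both models more than triple their score, and Medium gains about eight points more than Large.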

Real-world use cases

Mistral AI has highlighted a range of use cases for the Agents API, demonstrating its flexibility across multiple sectors:

- Coding Assistant with GitHub: An agent oversees a developer assistant powered by Devstral, managing tasks and automating code development workflows.

- Linear Tickets Assistant: Transforms call transcripts into project deliverables using multi-server MCP architecture.

- Financial Analyst: Sources financial metrics and securely compiles reports through orchestrated MCP servers.

- Travel Assistant: Helps users plan trips, book accommodations, and manage travel needs.

- Nutrition Assistant: Supports users in setting dietary goals, logging meals, and receiving personalized recommendations.

Managing context and conversations

The Agents API’s stateful conversation system ensures that agents maintain context throughout their interactions. Developers can start new conversations or continue existing ones without losing the thread, with conversation history stored and accessible for future use.
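The idea behind stateful conversations can be illustrated with a small in-memory store: starting a conversation returns an identifier, and later turns are appended to the same history by referencing that identifier. This is a hypothetical client-side sketch of the concept, assuming (as is typical for stateful APIs) that conversations are addressed by an ID; the class and method names are illustrative, not Mistral's SDK.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Conversation:
    conversation_id: str
    agent_name: str
    entries: list = field(default_factory=list)  # ordered (role, content) pairs


class ConversationStore:
    """Hypothetical in-memory stand-in for persisted conversation history."""

    def __init__(self):
        self._conversations = {}

    def start(self, agent_name, user_message):
        # A new conversation gets a fresh ID and its first user entry.
        conv = Conversation(str(uuid.uuid4()), agent_name)
        conv.entries.append(("user", user_message))
        self._conversations[conv.conversation_id] = conv
        return conv.conversation_id

    def append(self, conversation_id, role, content):
        # Continuing a conversation: look it up by ID and extend its history.
        self._conversations[conversation_id].entries.append((role, content))

    def history(self, conversation_id):
        # Return a copy so callers cannot mutate the stored history.
        return list(self._conversations[conversation_id].entries)


store = ConversationStore()
cid = store.start("travel-assistant", "Find me a hotel in Lyon for May 3.")
store.append(cid, "assistant", "Here are three options near the old town.")
store.append(cid, "user", "Book the second one.")
print(len(store.history(cid)))  # 3
```

Because each turn is keyed by the conversation ID, a later request ("Book the second one.") can be resolved against earlier context without the client resending the whole exchange.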

Additionally, the API supports streaming output, enabling real-time updates in response to user requests or agent actions.
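Streaming means the client renders partial output as it arrives instead of waiting for the complete reply. The sketch below simulates this with a generator; `fake_stream` is a hypothetical stand-in for a streaming response, not part of Mistral's SDK, and real streams typically deliver structured events rather than bare strings.

```python
import time
from typing import Iterator


def fake_stream(text: str, delay: float = 0.0) -> Iterator[str]:
    """Hypothetical stand-in for a streaming response: yields small text chunks."""
    for word in text.split(" "):
        time.sleep(delay)  # simulate latency between chunks
        yield word + " "


def render_stream(chunks: Iterator[str]) -> str:
    """Display each chunk as soon as it arrives, then return the full reply."""
    parts = []
    for chunk in chunks:
        print(chunk, end="", flush=True)  # show partial output immediately
        parts.append(chunk)
    print()
    return "".join(parts).rstrip()


reply = render_stream(fake_stream("Your itinerary for Lyon is ready."))
```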

Dynamic orchestration of multiple agents

A core capability of the Agents API is its ability to coordinate multiple agents seamlessly. Developers can create customized workflows, assigning specific tasks to specialized agents and enabling handoffs as needed. This modular approach allows enterprises to deploy AI agents that work together to solve complex problems more effectively.
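The article does not describe how handoffs are decided internally, so the following is only a conceptual sketch: a triage agent passes a task along its allowed handoff targets until a specialist accepts it. A simple keyword rule (`can_handle`) stands in for the model's judgment, and the agent names and the `route` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Agent:
    name: str
    # Stand-in for the model's judgment: can this agent handle the task itself?
    can_handle: Callable[[str], bool]
    # Names of agents this agent is allowed to hand the task off to.
    handoffs: List[str] = field(default_factory=list)


def route(task: str, start: str, agents: Dict[str, Agent],
          max_hops: int = 5) -> Optional[List[str]]:
    """Follow handoffs from `start` until some agent accepts the task.

    Returns the chain of agent names involved, or None if no agent accepts.
    """
    chain = [start]
    current = agents[start]
    for _ in range(max_hops):
        if current.can_handle(task):
            return chain
        # Only consider agents not already in the chain, which prevents cycles.
        targets = [agents[n] for n in current.handoffs if n not in chain]
        if not targets:
            return None
        # Prefer a target that accepts the task; otherwise try the first one.
        current = next((a for a in targets if a.can_handle(task)), targets[0])
        chain.append(current.name)
    return None


agents = {
    "triage": Agent("triage", lambda t: False, handoffs=["finance", "travel"]),
    "finance": Agent("finance", lambda t: "revenue" in t.lower()),
    "travel": Agent("travel", lambda t: "flight" in t.lower()),
}
print(route("Summarize Q3 revenue by region", "triage", agents))  # ['triage', 'finance']
```

Restricting each agent to an explicit list of handoff targets keeps the workflow auditable: every path a task can take is visible in the configuration.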

What the Mistral Agents API means for enterprise technical decision-makers

For technical decision-makers such as lead or senior AI engineers, the Mistral Agents API is a powerful addition to the AI toolkit.

The ability to dynamically orchestrate agents and seamlessly integrate real-world data sources means these roles can deploy AI solutions faster and with greater precision—critical in environments where quick iteration and performance tuning are paramount.

These professionals often have to balance tight deployment timelines against the need to maintain model performance across different environments.

The Agents API’s built-in connectors—like web search, document libraries, and secure code execution—can significantly reduce the need for ad hoc integrations and patchwork tooling. This streamlined approach saves time and lowers friction, allowing teams to focus more on fine-tuning models and less on building surrounding infrastructure.

Moreover, stateful conversation management and real-time updates through streaming output align well with the demands of AI orchestration and deployment. These features make it easier for engineers to maintain context across iterations and ensure consistent, high-quality interactions with end users.

For those responsible for introducing and integrating new AI tools into organizational workflows, the MCP tool support also ensures that agents can access data from a wide range of APIs and systems, further enhancing operational efficiency.

Continuing to bolster Mistral’s enterprise AI push

The release of the Agents API follows Mistral AI’s recent launch of Le Chat Enterprise, a unified AI assistant platform designed for enterprise productivity and data privacy. Le Chat Enterprise is powered by the new Mistral Medium 3 model, which offers impressive performance at a lower computational cost than larger models.

Mistral Medium 3 is particularly strong in software development tasks, outperforming comparable models in key coding benchmarks like HumanEval and MultiPL-E. It also shows competitive performance in multilingual and multimodal scenarios, making it an attractive option for businesses operating in diverse environments.

Le Chat Enterprise supports enterprise-grade features such as data sovereignty, hybrid deployment, and strict access controls, which can be crucial for organizations in regulated sectors. The platform consolidates AI functionality within a single environment, enabling customization, seamless integration with existing workflows, and full control over deployment and data security.

But it’s another proprietary service

Mistral’s earlier releases, like Mistral 7B, were open source and widely embraced by the developer community for their transparency and flexibility.

However, Mistral Medium 3 is a proprietary model: it is not released under an open-source license and can only be accessed through Mistral’s platform, APIs, or partners.

This shift has caused some frustration in the AI community, where open access and transparency are highly valued for experimentation and customization.

The Agents API itself also follows a proprietary framework: it is not available under an open-source license and is managed exclusively by Mistral, with access available via subscription and API calls.

Pricing and availability

Pricing for the Agents API aligns with Mistral’s broader suite of models and tools:

- Mistral Medium 3: $0.40 per million input tokens and $2 per million output tokens.

- Web Search Connector: $30 per 1,000 calls.

- Code Execution: $30 per 1,000 calls.

- Image Generation: $100 per 1,000 images.

- Premium News Access: $50 per 1,000 calls.

- Document Library with RAG: Included in plans like Team and Enterprise, with up to 30GB per user in some tiers.

- Custom connectors, audit logs, SAML SSO, and other enterprise features: Available in Team and Enterprise plans (pricing typically requires contacting Mistral’s sales team).

For developers and enterprise customers, these costs can add up quickly—making budget considerations and careful integration planning essential.
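A rough budget estimate only needs the unit prices listed above. The sketch below uses those prices; the monthly workload (token counts and connector calls) is made up purely for illustration, and plan-based items such as the Document Library are left out.

```python
# Unit prices in USD, as listed above.
PRICE_PER_MILLION_INPUT_TOKENS = 0.40
PRICE_PER_MILLION_OUTPUT_TOKENS = 2.00
PRICE_PER_THOUSAND = {
    "web_search": 30.0,
    "code_execution": 30.0,
    "image_generation": 100.0,  # per 1,000 images
    "premium_news": 50.0,
}


def estimate_monthly_cost(input_tokens, output_tokens, connector_calls):
    """Estimate spend from token volumes and per-connector call counts."""
    token_cost = (
        input_tokens / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS
        + output_tokens / 1_000_000 * PRICE_PER_MILLION_OUTPUT_TOKENS
    )
    connector_cost = sum(
        count / 1_000 * PRICE_PER_THOUSAND[name]
        for name, count in connector_calls.items()
    )
    return round(token_cost + connector_cost, 2)


# Hypothetical workload: 50M input tokens, 10M output tokens,
# 20,000 web searches and 5,000 code executions per month.
cost = estimate_monthly_cost(
    50_000_000, 10_000_000, {"web_search": 20_000, "code_execution": 5_000}
)
print(f"${cost:,.2f}")
```

For this made-up workload the estimate is $790.00, of which only $40 comes from tokens and $750 from connector calls, which illustrates why connector usage deserves as much attention as token volume when planning a budget.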

Looking ahead

Mistral AI positions its Agents API as the backbone of enterprise-grade agentic platforms, empowering developers to create solutions that move beyond traditional text generation.

Despite the community debate around open source versus proprietary access, Mistral’s focus on enterprise-grade features, customizable workflows, and secure integrations positions this API as a significant option for businesses seeking advanced AI capabilities.

For developers and technical decision makers, the question will be whether the proprietary nature of the Agents API and the underlying models aligns with their own operational and budgetary needs. For those who prioritize rapid deployment, managed services, and full integration with enterprise systems, Mistral’s evolving platform could offer significant advantages.

For more information or to get started, Mistral AI encourages developers to explore the provided documentation and demos.
