Moonvalley’s Marey is a state-of-the-art AI video model trained on FULLY LICENSED data


A few years ago, there was no such thing as a “generative AI video model.”

Today, there are dozens, including many capable of rendering ultra high-definition, ultra-realistic Hollywood-caliber video in seconds from text prompts or user-uploaded images and existing video clips. If you’ve read VentureBeat in the last few months, you’ve no doubt come across articles about these models and the companies behind them, from Runway’s Gen-3 to Google’s Veo 2 to OpenAI’s long-delayed but finally available Sora to Luma AI, Pika, and Chinese upstarts Kling and Hailuo. Even Alibaba and a startup called Genmo have offered open source versions.

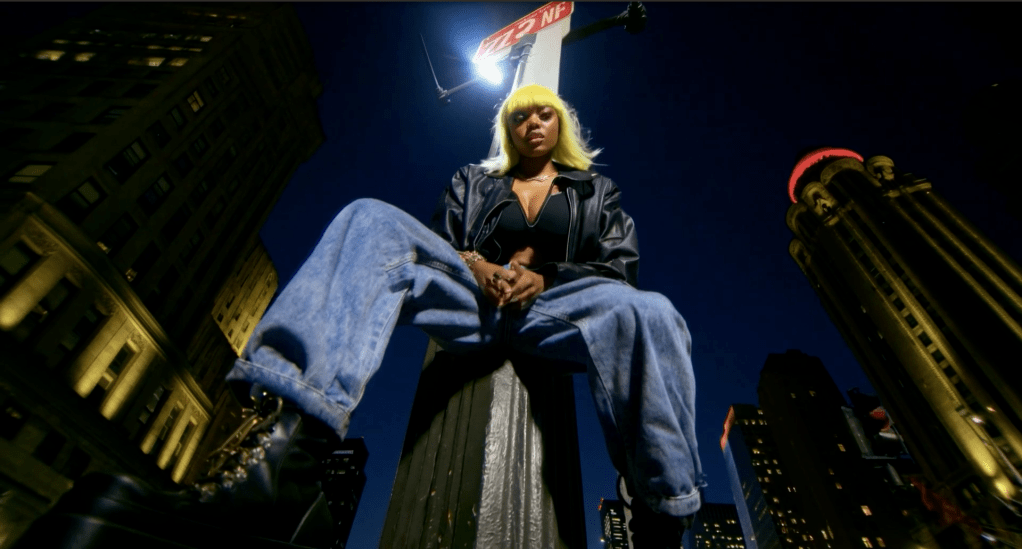

Already, they’ve been used to make portions of major productions from Everything Everywhere All at Once to HBO’s True Detective: Night Country to music videos and TV commercials by Toys “R” Us and Coca-Cola. But despite Hollywood’s and filmmakers’ relatively rapid embrace of AI, there’s still one big looming issue: copyright concerns.

As best we can tell, given that most of these AI video model startups don’t publicly share precise details of their training data, most are trained on vast swaths of videos uploaded to the web or collected from other archival sources, including copyrighted works whose owners may or may not have granted the AI video companies express permission to train on them. In fact, Runway is among the companies facing a class action lawsuit over this very issue (still working its way through the courts), and Nvidia reportedly scraped a huge swath of YouTube videos for this purpose as well. Whether scraping data, including videos, constitutes fair and transformative use remains in dispute.

But now there’s a new alternative for those who are concerned about copyright and don’t want to use models with a question mark hanging over them: a startup called Moonvalley, founded by former Google DeepMinders and researchers from Meta, Microsoft and TikTok, among others, has introduced Marey, a generative AI video model designed for Hollywood studios, filmmakers, and enterprise brands. Positioned as a “clean” state-of-the-art foundational AI video model, Marey is trained exclusively on owned and licensed data, offering an ethical alternative to AI models developed using scraped content.

“People said it wasn’t technically feasible to build a cutting-edge AI video model without using scraped data,” said Moonvalley CEO and co-founder Naeem Talukdar, in a recent video call interview with VentureBeat. “We proved otherwise.”

Marey, available now on an invitation-only waitlist basis, joins Adobe’s Firefly Video model, which that long-established software vendor says is also enterprise-grade, having been trained only on licensed data and Adobe Stock data (a point of controversy among some contributors), and which comes with indemnification for the enterprises that use it. Moonvalley also provides indemnification in clause 7 of this document, saying it will defend its customers at its own expense.

Moonvalley is hoping these features will make Marey more appealing to big studios and filmmakers than the countless and ever-growing array of other AI video creation options, even as rivals such as Runway strike deals with those same studios.

More ‘ethical’ AI video?

Marey is the result of a collaboration between Moonvalley and Asteria, an artist-led AI film and animation studio. The model is built to assist rather than replace creative professionals, providing filmmakers with new tools for AI-driven video production while maintaining traditional industry standards.

“Our conviction was that you’re not going to get mainstream adoption in this industry unless you do this with the industry,” Talukdar said. “The industry has been loud and clear that in order for them to actually use these models, we need to figure out how to build a clean model. And up until today, the top track was you couldn’t do it.”

Rather than scraping the internet for content, Moonvalley built direct relationships with creators to license their footage. The company took several months to establish these partnerships, ensuring all data used for training was legally acquired and fully licensed.

Moonvalley’s licensing strategy is also designed to support content creators by compensating them for their contributions.

“Most of our relationships are actually coming inbound now that people have started to hear about what we’re doing,” Talukdar said. “For small-town creators, a lot of their footage is just sitting around. We want to help them monetize it, and we want to do artist-focused models. It ends up being a very good relationship.”

Talukdar told VentureBeat that while the company is still assessing and revising its compensation models, it generally compensates creators based on the duration of their footage, paying them an hourly or per-minute rate under fixed-term licensing agreements (e.g., 12 or 4 months). This allows for potential recurring payments if the content continues to be used.

The company’s goal is to make high-end video production more accessible and cost-effective, allowing filmmakers, studios, and advertisers to explore AI-generated storytelling without legal or ethical concerns.

More cinematographic control beyond text prompts, images, and camera directions

Talukdar explained that with Marey, Moonvalley took a different approach from existing AI video models by focusing on professional-grade production rather than consumer applications.

“Most generative video companies today are more consumer-focused,” he said. “They build simple models where you prompt a chatbot, generate some clips, and add cool effects. Our focus is different—what’s the technology needed for Hollywood studios? What do major brands need to make Super Bowl commercials?”

Marey introduces several advancements in AI-generated video, including:

- Native HD Generation – Generates high-definition video without relying on upscaling, reducing visual artifacts.

- Extended Video Length – Unlike most AI video models, which generate only a few seconds of footage, Marey can create 30-second sequences in a single pass.

- Layer-Based Editing – Unlike other generative video models, Marey allows users to separately edit the foreground, midground, and background, providing more precise control over video composition.

- Storyboard & Sketch-Based Inputs – Instead of relying only on text prompts (which many AI models do), Marey enables filmmakers to create using storyboards, sketches, and even live-action references, making it more intuitive for professionals.

- More Responsive to Conditioning Inputs – The model was designed to better interpret external inputs like drawings and motion references, making AI-generated video more controllable.

- “Generative-Native” Video Editor – Moonvalley is developing companion software alongside Marey, which functions as a generative-native video editing tool that helps users manage projects and timelines more effectively.

“The model itself is just built very heavily around controllability,” Talukdar explained. “You need to have significantly more controls around the output—being able to change the characters. It’s the first model that allows you to do layer-based editing, so you can edit the foreground, mid-ground, and background separately. It’s also the first model built for Hollywood, purpose-built for production.”

In addition, he told VentureBeat that Marey relies on a Diffusion-Transformer Hybrid Model that combines diffusion and transformer-based architectures.

“The models are diffusion-transformer models, so it’s the transformer architecture, and then you have diffusion as part of the layers,” Talukdar said. “When you introduce controllability, it’s usually through those layers that you do it.”
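For readers who want a rough picture of what a “diffusion-transformer” looks like in practice, here is a minimal toy sketch in Python with NumPy. It is not Moonvalley’s code and makes no claim about Marey’s actual design: the class name `ToyDiTBlock`, the function `denoise_step`, the sizes, and the scale-and-shift conditioning scheme are all illustrative assumptions, chosen because that style of conditioning is a common way to feed control signals into transformer layers.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 16  # hypothetical width of each latent video token


def layer_norm(x):
    # Normalize each token to zero mean and unit variance.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + 1e-6)


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


class ToyDiTBlock:
    """One transformer block whose normalized activations are scaled and
    shifted by a conditioning vector (an assumed control mechanism)."""

    def __init__(self, dim):
        s = 1.0 / np.sqrt(dim)
        self.wq, self.wk, self.wv, self.wo = (
            rng.normal(0, s, (dim, dim)) for _ in range(4)
        )
        self.w1 = rng.normal(0, s, (dim, 4 * dim))
        self.w2 = rng.normal(0, 1.0 / np.sqrt(4 * dim), (4 * dim, dim))
        # Maps the conditioning vector to two (scale, shift) pairs.
        self.w_mod = rng.normal(0, s, (dim, 4 * dim))

    def __call__(self, tokens, cond):
        scale1, shift1, scale2, shift2 = np.split(cond @ self.w_mod, 4)
        # Self-attention over the conditioned tokens, with a residual link.
        h = layer_norm(tokens) * (1 + scale1) + shift1
        q, k, v = h @ self.wq, h @ self.wk, h @ self.wv
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
        tokens = tokens + (attn @ v) @ self.wo
        # Feed-forward layer, again conditioned, with a residual link.
        h = layer_norm(tokens) * (1 + scale2) + shift2
        return tokens + np.maximum(h @ self.w1, 0) @ self.w2


def denoise_step(block, noisy_tokens, cond, step_size=0.1):
    # Simplified diffusion update: treat the block output as predicted
    # noise and remove a fraction of it from the noisy latents.
    predicted_noise = block(noisy_tokens, cond)
    return noisy_tokens - step_size * predicted_noise


block = ToyDiTBlock(DIM)
latents = rng.normal(size=(8, DIM))  # 8 noisy latent tokens
control = rng.normal(size=DIM)       # stand-in for a sketch or motion embedding
out = denoise_step(block, latents, control)
```

In this toy version, changing `control` changes the output of every layer it touches, which is one plausible reading of Talukdar’s remark that controllability is usually introduced through those layers. A real model would use trained weights and repeat the denoising step many times.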

Funded by big name VCs but not as much as other AI video startups (yet)

Moonvalley is also announcing this week a $70 million seed round led by Bessemer Venture Partners, Khosla Ventures, and General Catalyst. Investors Hemant Taneja, Samir Kaul, and Byron Deeter have also joined the company’s board of directors.

Talukdar noted that Moonvalley has so far raised substantially less than some of its competitors (Runway is reported to have raised $270 million in total across several rounds) but said the company has made the most of its resources by assembling an elite team of AI researchers and engineers.

“We raised around $70 million, quite a bit less than our competitors, certainly,” he said. “But that really boils down to the team—having a team that can build that architecture significantly more efficiently, compute, and all those different things.”

Marey is currently in a limited-access phase, with select studios and filmmakers testing the model. Moonvalley plans to gradually expand access over the coming weeks.

“Right now, there’s a number of studios that are getting access to it, and we have an alpha group with a couple dozen filmmakers using it,” Talukdar confirmed. “The hope is that it’ll be fully available within a couple of weeks, worst case within a couple of months.”

With the launch of Marey, Moonvalley and Asteria aim to position themselves at the forefront of AI-assisted filmmaking, offering studios and brands a solution that integrates AI without compromising creative integrity. But AI video startup rivals such as Runway, Pika, and Hedra are continuing to add new features like character voice and movements.