Sakana AI Introduces Text-to-LoRA (T2L): A Hypernetwork that Generates Task-Specific LLM Adapters (LoRAs) based on a Text Description of the Task

Transformer models have significantly influenced how AI systems approach natural language understanding, translation, and reasoning. Large language models (LLMs) in particular have grown in size and complexity to the point where they cover a broad range of capabilities across domains. However, applying these models to new, specialized tasks remains difficult: each new application typically demands careful dataset selection, hours of fine-tuning, and substantial compute. Although these models offer a strong knowledge foundation, their rigidity when facing new domains with minimal data remains a core limitation. As researchers aim to bring AI closer to human-like adaptability, the focus has shifted toward more efficient methods that let such models modify their behavior without retraining every parameter.

The Challenge of Customizing LLMs for New Tasks

The central difficulty lies in adapting foundation models to unique applications without repeating costly, time-intensive training cycles. Most solutions today rely on training a new adapter, a separate component that steers the model's behavior, for each task. Each adapter is built from scratch, and what is learned for one application often cannot be transferred to another, so the process is slow and scales poorly. Fine-tuning on a specific dataset is also sensitive to hyperparameter choices, and a poor configuration can lead to weak results. Even when adaptation succeeds, the outcome is often a large collection of isolated task-specific components that are hard to integrate or reuse.

In response to these limitations, researchers have adopted Low-Rank Adaptation (LoRA), a technique that trains only a small set of parameters rather than the entire model. LoRA injects trainable low-rank matrices into specific layers of a frozen LLM, so the base weights stay unchanged while the model is customized for a task. This sharply reduces the number of trainable parameters. However, a new LoRA adapter still has to be trained from scratch for each task: while more efficient than full fine-tuning, the method does not allow fast, on-the-fly adaptation. Recent work has tried to compress these adapters further or to combine multiple adapters at inference time, but these approaches still depend on previously trained adapters and cannot generate new ones dynamically.
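The low-rank update can be illustrated with a minimal NumPy sketch. It follows the common LoRA formulation, y = W x + (alpha / r) * B A x, with B initialized to zero; the matrix sizes, the rank, and the alpha value are arbitrary assumptions chosen for illustration, not settings used by T2L.

```python
import numpy as np

rng = np.random.default_rng(0)

d_out, d_in, r = 64, 64, 4   # illustrative sizes; rank r is much smaller than d_in, d_out
alpha = 8.0                  # LoRA scaling hyperparameter (assumed value)

W = rng.standard_normal((d_out, d_in))      # frozen pretrained weight, never updated
A = rng.standard_normal((r, d_in)) * 0.01   # trainable down-projection (r x d_in)
B = np.zeros((d_out, r))                    # trainable up-projection (d_out x r), zero-init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Base output plus the scaled low-rank correction; W itself is left untouched."""
    return W @ x + (alpha / r) * (B @ (A @ x))

full_params = W.size            # parameters a full fine-tune would update
lora_params = A.size + B.size   # parameters LoRA trains instead
print(full_params, lora_params)  # 4096 512
```

Because B starts at zero, the adapted layer initially behaves exactly like the frozen base layer, and only the 512 entries of A and B (versus 4,096 in W) would be trained.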

Introducing Text-to-LoRA: Instant Adapter Generation from Task Descriptions

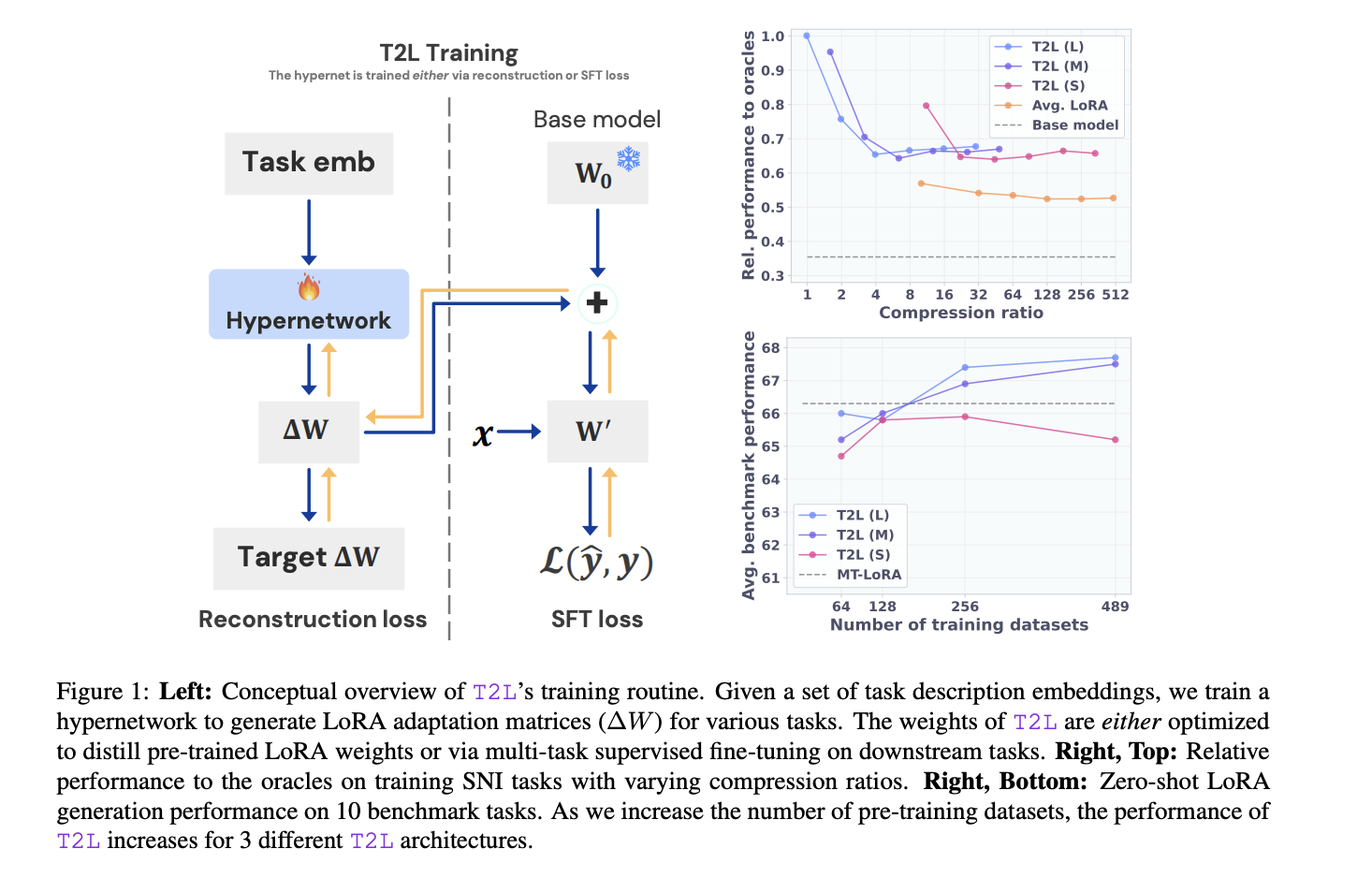

Researchers at Sakana AI introduced Text-to-LoRA (T2L), which generates task-specific LoRA adapters instantly from a textual description of the target task, instead of training a new adapter for each task. T2L is a hypernetwork that outputs adapter weights in a single forward pass. It learns from a library of pre-existing LoRA adapters covering a range of tasks, including GSM8K, Arc-challenge, and BoolQ. Once trained, T2L can take a task description and generate the corresponding adapter without additional training. This removes the need to train adapters manually and enables the system to generalize to tasks it has never encountered before.

The T2L architecture combines module-specific and layer-specific embeddings to guide the generation process. Three architectural variants were tested: a large version with 55 million parameters, a medium version with 34 million, and a small version with just 5 million. Despite their differences in size, all three can generate the low-rank matrices an adapter needs. Training used 479 tasks from the Super Natural Instructions dataset, with each task described in natural language and encoded into a vector. By combining this task embedding with learned layer and module embeddings, T2L produces the low-rank A and B matrices for each adapted layer. A single hypernetwork can therefore stand in for hundreds of hand-crafted LoRAs, producing consistent results with a much smaller computational footprint.
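To make the generation step more concrete, here is a toy NumPy sketch of a hypernetwork that maps a task embedding, together with learned module and layer embeddings, to LoRA A and B matrices. Everything in it is an illustrative assumption rather than Sakana AI's implementation: the dimensions, concatenation as the way the embeddings are combined, the two-layer MLP, random weights in place of trained ones, a random vector standing in for the encoded task description, and square target modules of equal size. The per-module loop could be batched so that all adapters come out of one forward pass.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dimensions, for illustration only.
EMB_DIM = 32             # size of task / module / layer embeddings
HIDDEN = 64              # hypernetwork hidden width
D_MODEL, RANK = 16, 2    # every target module is assumed to be D_MODEL x D_MODEL
MODULES = ["q_proj", "v_proj"]
N_LAYERS = 4

# Lookup tables for target modules and layers (learned in practice, random here).
module_emb = {m: rng.standard_normal(EMB_DIM) for m in MODULES}
layer_emb = rng.standard_normal((N_LAYERS, EMB_DIM))

# Tiny two-layer MLP mapping the combined embedding to a flat vector holding A and B.
W1 = rng.standard_normal((HIDDEN, 3 * EMB_DIM)) * 0.1
W2 = rng.standard_normal((2 * RANK * D_MODEL, HIDDEN)) * 0.1

def generate_adapter(task_emb: np.ndarray) -> dict:
    """Return {(module, layer): (A, B)} for every targeted module in every layer."""
    adapter = {}
    for m in MODULES:
        for layer in range(N_LAYERS):
            z = np.concatenate([task_emb, module_emb[m], layer_emb[layer]])
            h = np.maximum(W1 @ z, 0.0)                          # ReLU hidden layer
            out = W2 @ h
            A = out[: RANK * D_MODEL].reshape(RANK, D_MODEL)     # r x d_in
            B = out[RANK * D_MODEL :].reshape(D_MODEL, RANK)     # d_out x r
            adapter[(m, layer)] = (A, B)
    return adapter

# Usage: a random vector stands in for an encoded task description.
task_emb = rng.standard_normal(EMB_DIM)
adapter = generate_adapter(task_emb)
A, B = adapter[("q_proj", 0)]
print(len(adapter), A.shape, B.shape)  # 8 (2, 16) (16, 2)
```

Because the module and layer embeddings are inputs rather than separate output heads, one shared network can emit weights for every targeted module and layer; only the task embedding changes from one task to the next.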

Benchmark Performance and Scalability of T2L

On benchmarks such as Arc-easy and GSM8K, T2L matched or surpassed the performance of task-specific LoRAs. For instance, T2L reached 76.6% accuracy on Arc-easy, matching the best manually tuned adapter, and 89.9% on BoolQ, slightly outperforming the original adapter. Even on harder benchmarks such as PIQA and Winogrande, where overfitting typically hurts performance, T2L delivered better results than manually trained adapters. These gains are believed to stem from the lossy compression inherent in hypernetwork training, which acts as a form of regularization. Increasing the number of training datasets from 16 to 479 substantially improved zero-shot performance, indicating that T2L generalizes better with broader exposure during training.

Several Key Takeaways from the Research include:

- T2L allows instant adaptation of LLMs using only natural language descriptions.

- It supports zero-shot generalization to tasks not seen during training.

- Three architectural variants of T2L were tested with parameter counts of 55M, 34M, and 5M.

- Benchmarks include ArcE, BoolQ, GSM8K, Hellaswag, PIQA, MBPP, and more.

- T2L achieved benchmark accuracies of 76.6% (ArcE), 89.9% (BoolQ), and 92.6% (Hellaswag).

- It matched or exceeded manually trained LoRAs in performance on multiple tasks.

- Trained using 479 tasks from the Super Natural Instructions dataset.

- T2L uses the gte-large-en-v1.5 model for generating task embeddings.

- LoRA adapters produced by T2L target only query and value projections in attention blocks, totaling 3.4M parameters.

- Performance remained consistent even with higher reconstruction loss, showing resilience to compression.
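As a rough sanity check on the 3.4M figure listed above, the LoRA parameter count can be worked out by hand: an adapter on a d_in x d_out projection adds r * (d_in + d_out) weights. The base-model shapes below (a Mistral-7B-style model with 32 layers, width 4096, and grouped-query value projections of width 1024) and the rank of 8 are assumptions that this article does not state; under them the count lands at about 3.4M.

```python
# Assumed base-model shapes (Mistral-7B-style); NOT stated in the article.
HIDDEN = 4096     # model width, the input size of q_proj and v_proj
Q_OUT = 4096      # q_proj output width
V_OUT = 1024      # v_proj output width under grouped-query attention (8 KV heads x 128)
N_LAYERS = 32
RANK = 8          # assumed LoRA rank

def lora_params(d_in: int, d_out: int, r: int) -> int:
    """A is r x d_in and B is d_out x r, so the adapter adds r * (d_in + d_out) weights."""
    return r * (d_in + d_out)

# Only query and value projections are adapted, once per transformer layer.
per_layer = lora_params(HIDDEN, Q_OUT, RANK) + lora_params(HIDDEN, V_OUT, RANK)
total = per_layer * N_LAYERS
print(per_layer, total)  # 106496 3407872
```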

In conclusion, this research marks a significant step toward flexible and efficient model adaptation. Instead of relying on repetitive, resource-heavy procedures, T2L uses natural language itself as a control mechanism, letting models specialize from simple task descriptions. This can greatly reduce the time and cost of adapting LLMs to new domains. It also suggests that, given enough prior adapters to train on, future models could adapt in seconds to any task described in plain English. Because a hypernetwork constructs adapters on demand, less storage is needed for model specialization, which further increases the method's practicality in production environments.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.